[kubernetes] Deploying a highly available, self-healing MySQL cluster with Helm

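Using Helm and an operator to deploy a highly available, self-healing MySQL cluster

The day after the deployment, the VMware disk filled up and several nodes crashed, so the IPs in some screenshots may not match.

Installing Helm

Download the release package from: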

https://github.com/helm/helm/releases

cd /kubernetes/helm

tar zxvf helm-v2.13.1-linux-amd64.tar.gz

ln -s /kubernetes/helm/linux-amd64/helm /usr/bin/

helm version

Installing Tiller

# Point Helm at the Alibaba Cloud repository; by default Helm pulls charts from storage.googleapis.com

helm init --client-only --stable-repo-url https://aliacs-app-catalog.oss-cn-hangzhou.aliyuncs.com/charts/

helm repo add incubator https://aliacs-app-catalog.oss-cn-hangzhou.aliyuncs.com/charts-incubator/

helm repo update

# Create the service account (it has to exist before the Tiller pod can start under it)

kubectl create serviceaccount --namespace kube-system tiller

# Create the cluster role binding

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

# Install the Tiller server with the TLS certificate flags. The official image cannot be pulled here, so -i points at a mirror image

helm init --service-account tiller --upgrade -i registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.13.1 --tiller-tls-cert /etc/kubernetes/ssl/tiller001.pem --tiller-tls-key /etc/kubernetes/ssl/tiller001-key.pem --tls-ca-cert /etc/kubernetes/ssl/ca.pem --tiller-namespace kube-system --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

Verification

kubectl get deploy --namespace kube-system tiller-deploy -o yaml|grep serviceAccount

kubectl -n kube-system get pods|grep tiller

helm version
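The grep check above can also be written as an explicit pass/fail test. This is a minimal sketch, assuming kubectl is already configured for the cluster; the function name tiller_uses_service_account is hypothetical and not part of Helm or kubectl.

```shell
#!/usr/bin/env bash
# Sketch: succeed only if the tiller-deploy Deployment runs under the expected
# service account. The function name is an illustrative assumption.
tiller_uses_service_account() {
  local expected="${1:-tiller}"
  local actual
  # Read the service account name straight from the pod template.
  actual="$(kubectl get deploy tiller-deploy --namespace kube-system \
    -o jsonpath='{.spec.template.spec.serviceAccountName}')" || return 2
  [ "$actual" = "$expected" ]
}
```

Usage: tiller_uses_service_account tiller && echo "Tiller is bound to the tiller service account"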

Installing the Presslabs MySQL Operator

The architecture of the Presslabs MySQL Operator is shown in the diagram below.

Download the charts locally

git clone https://github.com/kubernetes/charts.git

Deploying the Presslabs MySQL Operator

cd mysql-operator/charts/mysql-operator/

vi values.yaml

Create rbac-1.yaml

---
# The only thing that needs changing is the namespace; set it to match your environment
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: operator # set the namespace for your environment; the same applies below
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
  namespace: operator
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: operator
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: operator
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: operator
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

Create nfs-StorageClass-2.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: presslabs-managed-nfs-storage
  namespace: operator
provisioner: presslabs-mysql-nfs-storage # must match the PROVISIONER_NAME environment variable in the provisioner's configuration
parameters:
  archiveOnDelete: "false"

Installing presslabs/mysql-operator with Helm

# Add the chart repository

helm repo add presslabs https://presslabs.github.io/charts

# If the install fails with: Error: failed to download "presslabs-mysql-operator" (hint: running `helm repo update` may help)

# helm repo update

# Install with Helm

# helm install presslabs/mysql-operator -f values.yaml --namespace operator

helm install presslabs/mysql-operator --name presslabs -f values.yaml --namespace operator --set rbac.enabled=true

Set replicas to 3 in values.yaml

# Delete the release

# helm delete presslabs --purge

# Apply the updated values

helm upgrade presslabs --values values.yaml presslabs/mysql-operator --namespace operator --set rbac.enabled=true
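The add, update, install and upgrade steps above can be folded into one repeatable function. This is a rough sketch, assuming the Helm v2 client from this post and a values.yaml in the current directory; deploy_operator is a hypothetical name. It relies on helm upgrade --install, which installs the release if it does not exist yet and upgrades it otherwise.

```shell
#!/usr/bin/env bash
# Sketch: refresh the repo index, then install or upgrade the "presslabs" release.
# deploy_operator is an illustrative name; the flags mirror the commands above.
deploy_operator() {
  local values="${1:-values.yaml}"
  helm repo add presslabs https://presslabs.github.io/charts &&
  # Refreshing the index avoids the 'failed to download' error noted earlier.
  helm repo update &&
  helm upgrade --install presslabs presslabs/mysql-operator \
    --namespace operator -f "$values" --set rbac.enabled=true
}
```

Usage: deploy_operator values.yaml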

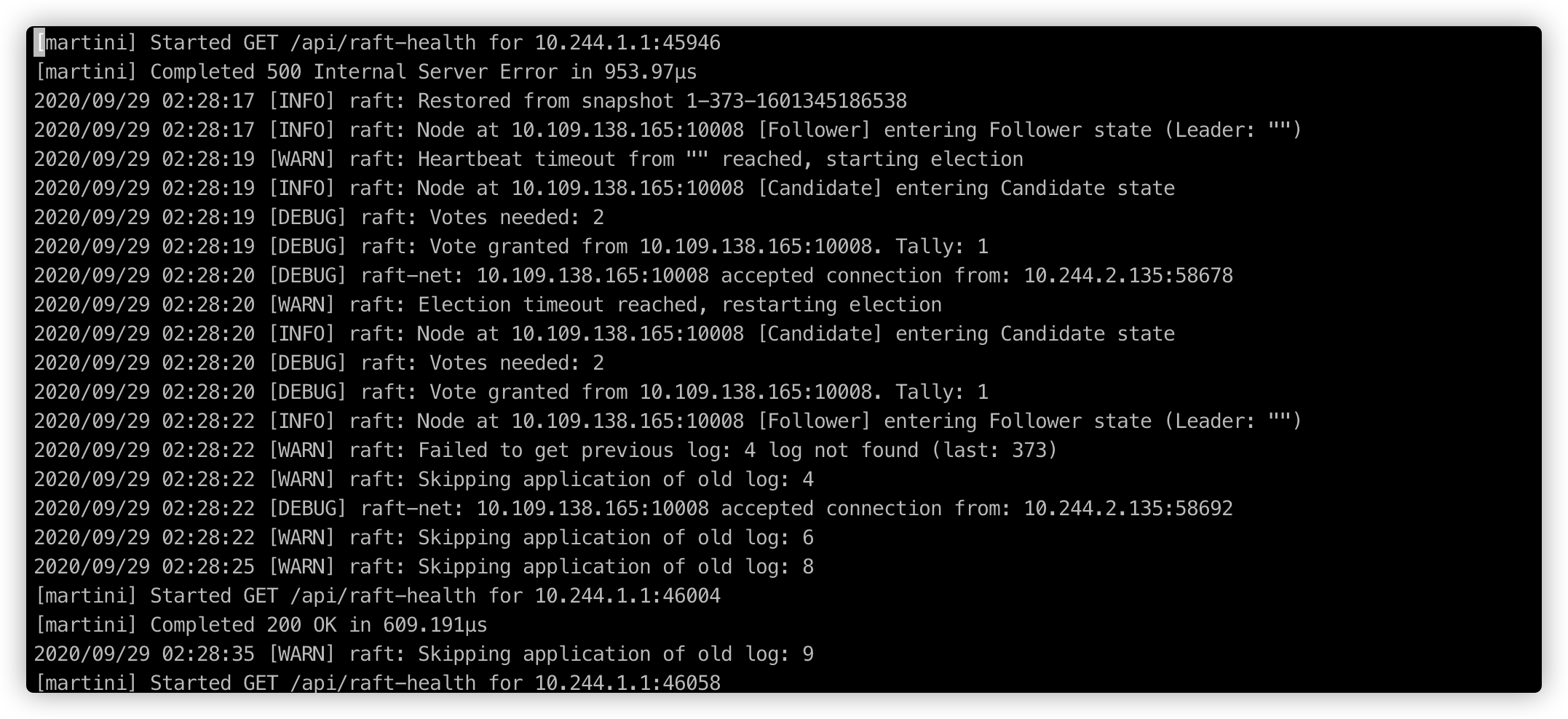

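Finding the leader

# You have to check the logs of each pod (mine are persisted). Here the pod with index 2 was elected leader after receiving 2 votes

# Command to follow the logs directly

kubectl logs -f presslabs-mysql-operator-2 -c orchestrator -n operator

Both the operator container and the orchestrator container go through a leader election; in this case the leader should be presslabs-mysql-operator-2. After the operator pods start, one of them becomes the leader. The leader's IP is the Service IP, and the log contains lines like these: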

2020/09/30 01:37:59 [INFO] raft: Election won. Tally: 2

2020/09/30 01:37:59 [INFO] raft: Node at 10.109.138.165:10008 [Leader] entering Leader state

# Look up the IP of the corresponding Service

kubectl get svc -o wide -n operator
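Reading every pod's log by eye gets tedious, so the leader line can be filtered out instead. This is a small sketch, assuming the log format matches the two raft lines quoted above; raft_leader_from_log is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print the address from the most recent "entering Leader state" line
# found in orchestrator log text on stdin. Prints nothing if no such line exists.
raft_leader_from_log() {
  # Matches lines such as:
  #   ... [INFO] raft: Node at 10.109.138.165:10008 [Leader] entering Leader state
  sed -n 's/.*raft: Node at \([0-9.]*:[0-9]*\) \[Leader\] entering Leader state.*/\1/p' \
    | tail -n 1
}
```

Usage: kubectl logs presslabs-mysql-operator-2 -c orchestrator -n operator | raft_leader_from_log

Repeat it for each operator pod; only the pod that won the election should print an address.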

Create the Ingress in ingress-4.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  # Uncomment the whitelist annotation below to enable an IP whitelist
  # To stop the forced redirect from port 80 to 443, add the annotation nginx.ingress.kubernetes.io/ssl-redirect: 'false' to the Ingress
  annotations:
    #nginx.ingress.kubernetes.io/whitelist-source-range: "60.191.70.64/29, xx.xxx.0.0/16"
    nginx.ingress.kubernetes.io/ssl-redirect: 'false'
  name: presslab-mysql-operator-ingress
  namespace: operator
spec:
  tls:
  - hosts:
    - test-mysql.jiaminxu.com
    # secretName: ihaozhuo
  rules:
  - host: test-mysql.jiaminxu.com
    http:
      paths:
      - path: /
        backend:
          serviceName: presslabs-mysql-operator
          servicePort: 80
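The extensions/v1beta1 Ingress API used above is no longer served from Kubernetes 1.22 onward. For newer clusters, the same rule can be expressed with networking.k8s.io/v1. The sketch below only prints the manifest; render_ingress is a hypothetical helper, the tls block is left out for brevity, and depending on how the ingress controller is set up a spec.ingressClassName may also be needed.

```shell
#!/usr/bin/env bash
# Sketch: print a networking.k8s.io/v1 Ingress equivalent to ingress-4.yaml.
# Arguments: host, backend Service name (optional), Service port (optional).
render_ingress() {
  local host="$1" service="${2:-presslabs-mysql-operator}" port="${3:-80}"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: presslab-mysql-operator-ingress
  namespace: operator
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: ${host}
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ${service}
            port:
              number: ${port}
EOF
}
```

Usage: render_ingress test-mysql.jiaminxu.com | kubectl apply -f -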

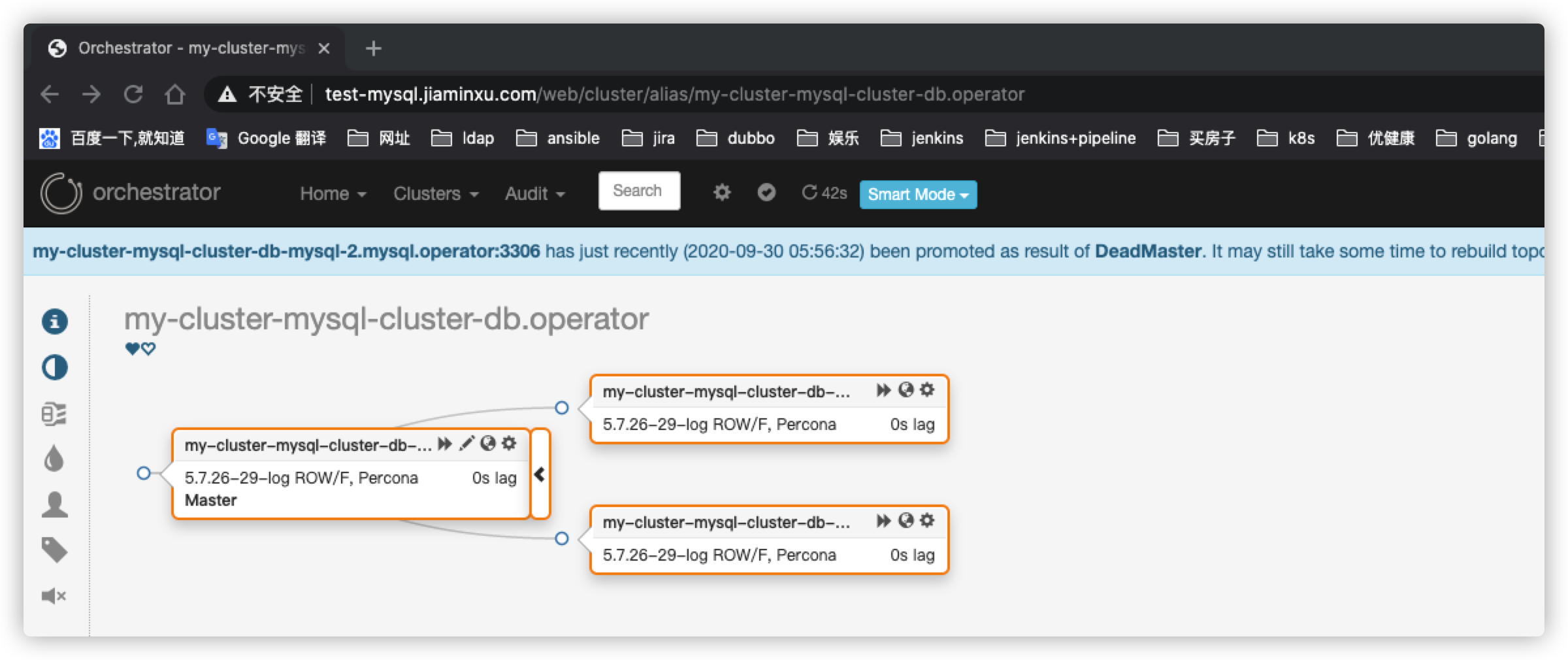

Open the custom domain in a browser

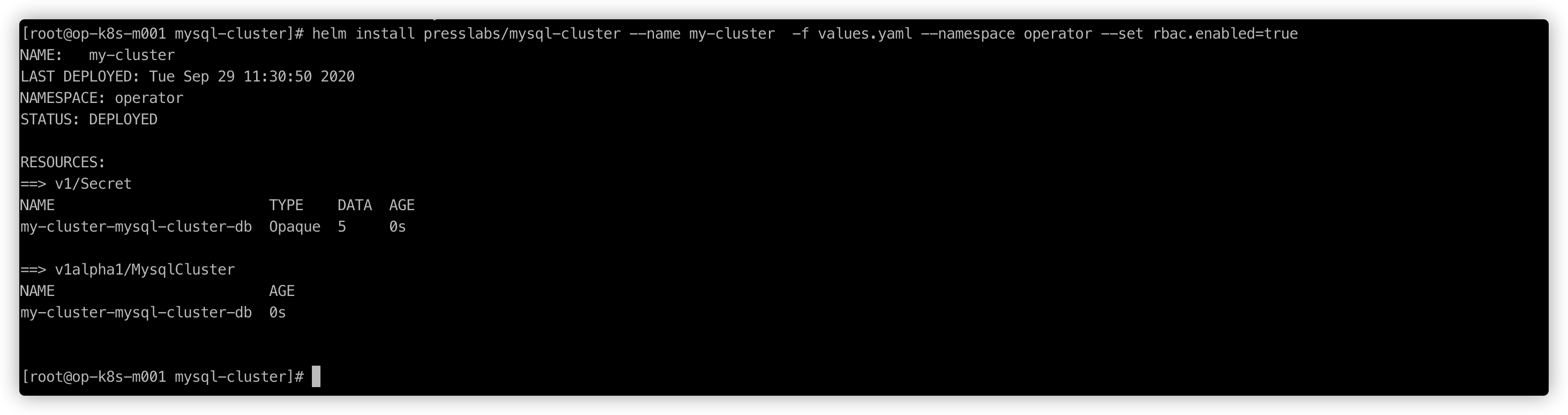

Deploying the Presslabs MySQL Cluster

# cd -

cd mysql-operator/charts/mysql-cluster/

# Set replicas to 3 to deploy a 3-node Percona MySQL cluster; the account names and passwords must be set yourself!

vi values.yaml

helm install presslabs/mysql-cluster --name my-cluster -f values.yaml --namespace operator --set rbac.enabled=true

# helm delete my-cluster --purge

Checking the Services

# List the Services

kubectl get svc -n operator

Once the nodes are deployed, orchestrator runs a leader election. The Service my-cluster-mysql-cluster-db-mysql-master points at the currently elected master node and accepts both reads and writes:

kubectl describe svc my-cluster-mysql-cluster-db-mysql-master -n operator

The Service my-cluster-mysql-cluster-db-mysql covers all MySQL nodes and can be used for reads. Its endpoint IPs include the master's IP:

kubectl describe svc my-cluster-mysql-cluster-db-mysql -n operator
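Instead of comparing the two describe outputs by eye, the endpoint IPs can be compared directly. This is a minimal sketch, assuming the Service names shown above and the operator namespace; svc_endpoint_ips and master_in_read_pool are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: print the pod IPs behind a Service, one per line.
svc_endpoint_ips() {
  kubectl get endpoints "$1" -n "${2:-operator}" \
    -o jsonpath='{.subsets[*].addresses[*].ip}' | tr ' ' '\n'
}

# Sketch: succeed if the master's IP also appears behind the all-nodes Service.
master_in_read_pool() {
  local ns="${1:-operator}"
  local master_ip
  master_ip="$(svc_endpoint_ips my-cluster-mysql-cluster-db-mysql-master "$ns" | head -n 1)"
  # No master endpoint at all (for example during a failover): report separately.
  [ -n "$master_ip" ] || return 2
  svc_endpoint_ips my-cluster-mysql-cluster-db-mysql "$ns" | grep -qxF "$master_ip"
}
```

Usage: master_in_read_pool operator && echo "master is also part of the read Service"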

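Testing high availability

If an operator/orchestrator pod fails and it happens to be the leader, a new leader election takes place. For example, when I restarted the current leader pod presslabs-mysql-operator-2, presslabs-mysql-operator-1 became the new leader:

In theory the MySQL workload cluster should not notice or be affected by any of this. I did not test that part.

Test: delete the MySQL master pod. As the screenshot shows, the master IP changed.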
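The IP change after deleting the master pod can also be observed from the command line by polling the master Service's endpoints. This is a rough sketch, assuming the Service name used in this post; wait_for_new_master is a hypothetical helper, and the attempt count and interval are arbitrary defaults.

```shell
#!/usr/bin/env bash
# Sketch: poll the master Service until its endpoint IP differs from the old one.
# Arguments: old master IP, max attempts (default 30), seconds between polls (default 2).
wait_for_new_master() {
  local old_ip="$1" attempts="${2:-30}" interval="${3:-2}" ip
  for _ in $(seq 1 "$attempts"); do
    ip="$(kubectl get endpoints my-cluster-mysql-cluster-db-mysql-master -n operator \
      -o jsonpath='{.subsets[*].addresses[*].ip}')"
    # An empty result means no master is currently selected; keep waiting.
    if [ -n "$ip" ] && [ "$ip" != "$old_ip" ]; then
      printf '%s\n' "$ip"
      return 0
    fi
    sleep "$interval"
  done
  return 1
}
```

Usage: note the current master IP, delete the master pod, then run wait_for_new_master with the old IP to print the address of the newly promoted master.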

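The master can also be changed from the web console, along these lines.

The former master node then goes into a stopped state; open its ⚙️ menu and choose start slave.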

Test deleting a pod

kubectl delete pod my-cluster-mysql-cluster-db-mysql-2 -n operator

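The console reports "master not replicating".

Open the master's ⚙️ menu and choose reset slave to clear it.

I am still finding my way around this setup; if you spot mistakes, corrections are welcome.

References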

https://blog.csdn.net/cloudvtech/article/details/105649723
