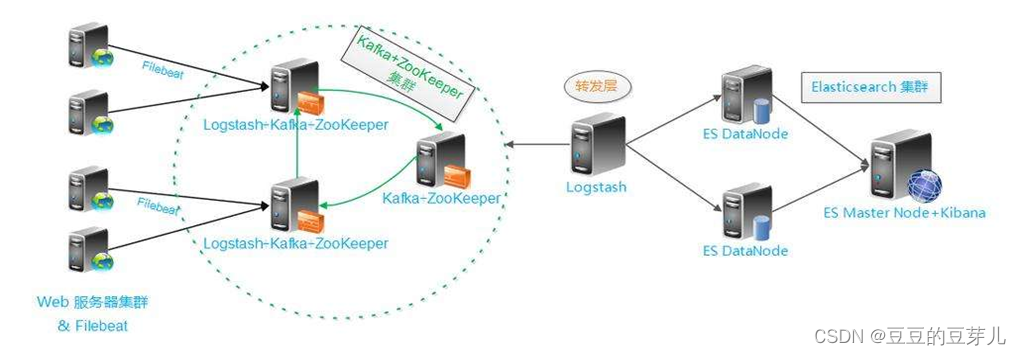

ELK Log Collection and Monitoring System: Architecture and Workflow

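Project overview and component roles:

Filebeat: deployed on every application server to collect logs, apply light filtering, and push them to Kafka (written in Go).

Kafka: deployed as a cluster, either on the same hosts as Logstash and Kibana or on dedicated hosts. Its main job is to buffer the data Filebeat sends so that Elasticsearch is not overwhelmed; because it stores that data, it has disk requirements. Kafka brokers coordinate with one another through ZooKeeper, so ZooKeeper must be installed before Kafka (written in Scala and Java).

ZooKeeper: manages the Kafka cluster; in this setup each Kafka host runs one ZooKeeper instance (written in Java).

Elasticsearch: stores the final, filtered data sent by Logstash (written in Java).

Logstash: filters the data it reads from Kafka and pushes the result to Elasticsearch (written in JRuby).

Kibana: reads data from Elasticsearch and presents it as charts and other visualizations (a web application built on Node.js).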

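Installation and deployment:

Note: not every component is written in Java (Filebeat is Go, Kibana is Node.js), but Elasticsearch, Logstash, Kafka, and ZooKeeper all run on the JVM, so it is simplest to install a JDK on every host. Elasticsearch, Logstash, Kibana, and Filebeat must also all be the same version.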
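The version requirement can be checked before installing anything. The following is a minimal sketch, assuming the version is the first `x.y.z` group after a hyphen in the package file name; the helper names are illustrative, and the file names are the ones used in the install commands later in this guide. Run against those names, it shows that they do not all carry the same version.

```python
import re


def package_version(filename):
    """Return the 'x.y.z' version embedded in a package file name, or None."""
    match = re.search(r"-(\d+\.\d+\.\d+)", filename)
    return match.group(1) if match else None


def versions_by_package(filenames):
    """Map each package file name to the version parsed from it."""
    return {name: package_version(name) for name in filenames}


def all_same_version(filenames):
    """True when every package file name carries the same version."""
    return len(set(versions_by_package(filenames).values())) == 1


# Package file names taken from the install commands in this guide.
packages = [
    "elasticsearch-7.6.1-x86_64.rpm",
    "logstash-7.8.0.rpm",
    "kibana-7.6.1-x86_64.rpm",
    "filebeat-7.8.1-x86_64.rpm",
]

for name, version in versions_by_package(packages).items():
    print(f"{name}: {version}")
print("versions match:", all_same_version(packages))
```

If the check reports a mismatch, download matching releases from the past-releases page listed below before continuing.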

(1) Download the RPM packages

Elasticsearch: https://www.elastic.co/cn/downloads/elasticsearch

Logstash: https://www.elastic.co/cn/downloads/logstash

Kibana: https://www.elastic.co/cn/downloads/kibana

Kafka: http://kafka.apache.org/downloads

ZooKeeper: https://zookeeper.apache.org/releases.html

Filebeat: https://www.elastic.co/cn/downloads/beats/filebeat

Past releases: https://www.elastic.co/cn/downloads/past-releases/

(2) Steps to perform on every machine to set up the base environment

Edit the hosts file for name resolution:

[root@es1 ~] vi /etc/hosts

192.168.10.10 es1

192.168.10.11 es2

192.168.10.12 es3

192.168.10.13 kafka1

192.168.10.14 kafka2

192.168.10.15 kafka3

Set the hostname:

[root@es1 ~] echo es1 > /etc/hostname (replace es1 with each machine's own hostname)

Configure the IP address:

[root@es1 ~] vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

BOOTPROTO=static (changed from dhcp to static)

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_PEERDNS=yes

IPV6_PEERROUTES=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=7aa25025-f28d-49e1-b2b0-80731e7e7569

DEVICE=ens33

ONBOOT=yes (changed from no to yes)

IPADDR=192.168.10.10 (change to this machine's IP)

NETMASK=255.255.255.0

GATEWAY=192.168.10.2

DNS1=8.8.8.8

DNS2=119.29.29.29

DNS3=61.139.2.69

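Disable SELinux

[root@es1 ~] vi /etc/sysconfig/selinux

Change SELINUX=enforcing to SELINUX=disabled

(Note: a machine that is running other services must not be rebooted; restart the network service instead to apply the IP change. The SELinux change itself only takes effect after a reboot.)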

[root@es1 ~] reboot

Configure the yum repositories:

[root@es1 ~] yum -y install wget

[root@es1 ~] cd /etc/yum.repos.d/

[root@es1 ~] mkdir ./xxx && mv ./*.repo xxx/

[root@es1 ~] wget -O CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@es1 ~] yum clean all && yum makecache

[root@es1 ~] yum -y install vim bash-completion net-tools.x86_64 lrzsz.x86_64

[root@es1 ~] exit

Reconnect to the machine over SSH.

Stop the firewall (in a production environment, keep it running and open the required ports instead)

[root@es1 ~] systemctl stop firewalld.service

[root@es1 ~] systemctl disable firewalld.service

Open a port:

[root@es1 ~] firewall-cmd --zone=public --add-port=9200/tcp --permanent

[root@es1 ~] firewall-cmd --reload

Install Elasticsearch:

[root@es1 ~] yum -y install elasticsearch-7.6.1-x86_64.rpm

[root@es1 ~] vim /etc/elasticsearch/elasticsearch.yml

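cluster.name: elk (cluster name)

node.name: es1 (this host's name)

path.data: /data/elk/data (data directory; create it manually and give the elasticsearch user ownership)

path.logs: /var/log/elasticsearch (log directory)

bootstrap.memory_lock: true (lock the process memory so that ES is never swapped out)

network.host: 192.168.10.10 (this host's IP address)

http.port: 9200 (service port)

discovery.seed_hosts: ["es1", "es2", "es3"] (cluster members; with many nodes it is not necessary to list them all)

cluster.initial_master_nodes: ["192.168.10.10", "192.168.10.11", "192.168.10.12"] (nodes that take part in the first master election; each entry must match a node's node.name or its IP address, and IP addresses are used here)

Enable X-Pack security for the cluster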

xpack.security.enabled: true

xpack.security.transport.ssl.enabled: true

xpack.security.transport.ssl.verification_mode: certificate

xpack.security.transport.ssl.keystore.path: /etc/elasticsearch/elastic-certificates.p12

xpack.security.transport.ssl.truststore.path: /etc/elasticsearch/elastic-certificates.p12

xpack.monitoring.collection.enabled: true

Configure the memory lock:

[root@es1~] vim /etc/elasticsearch/jvm.options

Change the following parameters

Change -Xmx1g to -Xmx13g

Change -Xms1g to -Xms13g
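The 13g value depends on how much RAM the host has. The following is a minimal sketch of the common sizing guidance (set the minimum and maximum heap to the same value, use no more than half of physical RAM, and stay below roughly 32 GB so the JVM keeps using compressed object pointers). The 31 GB ceiling and the function names are assumptions made for illustration, not values from this guide.

```python
def recommended_heap_gb(total_ram_gb, ceiling_gb=31):
    """Half of physical RAM, capped at ceiling_gb and never below 1 GB.

    ceiling_gb=31 is an assumed safety margin under the ~32 GB
    compressed-pointer threshold; adjust it for your JVM.
    """
    half = total_ram_gb // 2
    return max(1, min(half, ceiling_gb))


def jvm_heap_flags(heap_gb):
    """Return the two jvm.options lines, with min and max heap set equal."""
    return [f"-Xms{heap_gb}g", f"-Xmx{heap_gb}g"]


# Example: a host with 26 GB of RAM (hypothetical) ends up with a 13 GB heap.
for flag in jvm_heap_flags(recommended_heap_gb(26)):
    print(flag)
```

On a host with a different amount of memory, substitute the computed value for 13g in both lines of jvm.options.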

[root@es1~] mkdir /etc/systemd/system/elasticsearch.service.d

[root@es1~] vim /etc/systemd/system/elasticsearch.service.d/override.conf

(the drop-in file name must end in .conf, and the section header below is case-sensitive)

[Service]

LimitMEMLOCK=infinity

[root@es1~] cd /etc/systemd/

[root@es1~] chown -R elasticsearch:elasticsearch system/elasticsearch.service.d/

[root@es1~] systemctl daemon-reload

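2: Configure the certificates. Run the following on one node only, then copy the generated certificates to the other nodes in the cluster.

[root@es1~] /usr/share/elasticsearch/bin/elasticsearch-certutil ca

[root@es1 ~] /usr/share/elasticsearch/bin/elasticsearch-certutil cert --ca elastic-stack-ca.p12

(Press Enter at every prompt of both commands. They generate two files, elastic-certificates.p12 and elastic-stack-ca.p12, either in the directory where the commands were run or in /usr/share/elasticsearch/.)

3: Move the two files to /etc/elasticsearch/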

[root@es1 ~] mv /usr/share/elasticsearch/elastic-* /etc/elasticsearch/

[root@es1 ~] chown -R elasticsearch:elasticsearch /etc/elasticsearch/

[root@es1 ~] mkdir -p /data/elk/data

[root@es1 ~] chown -R elasticsearch:elasticsearch /data/elk/

[root@es1 ~] systemctl start elasticsearch

[root@es1 ~] systemctl status elasticsearch

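If the service does not start, check the errors in the log: tail -100 /var/log/elasticsearch/elk.log

4: Set the passwords of the built-in users. Use the same password for all of them; it is needed in later steps.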

[root@es1 ~] /usr/share/elasticsearch/bin/elasticsearch-setup-passwords interactive

Initiating the setup of passwords for reserved users elastic,apm_system,kibana,logstash_system,beats_system,remote_monitoring_user.

You will be prompted to enter passwords as the process progresses.

Please confirm that you would like to continue [y/N]y

Enter password for [elastic]: 123456

Reenter password for [elastic]: 123456

Enter password for [apm_system]: 123456

Reenter password for [apm_system]: 123456

Enter password for [kibana]: 123456

Reenter password for [kibana]: 123456

Enter password for [logstash_system]: 123456

Reenter password for [logstash_system]: 123456

Enter password for [beats_system]: 123456

Reenter password for [beats_system]: 123456

Enter password for [remote_monitoring_user]: 123456

Reenter password for [remote_monitoring_user]: 123456

The following output shows that the passwords were set:

Changed password for user [apm_system]

Changed password for user [kibana]

Changed password for user [logstash_system]

Changed password for user [beats_system]

Changed password for user [remote_monitoring_user]

Changed password for user [elastic]
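Once the passwords are set, the cluster state can be checked through the `_cluster/health` endpoint. The following is a minimal sketch using only the Python standard library; the function names are illustrative, the expected node count of 3 follows the three es hosts in this guide, and the sample response is hand-written for the example rather than captured from a real cluster.

```python
import base64
import json
import urllib.request


def fetch_health(base_url, user, password):
    """GET <base_url>/_cluster/health with HTTP basic auth; return the body."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        f"{base_url}/_cluster/health",
        headers={"Authorization": f"Basic {token}"},
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode()


def cluster_is_healthy(body, expected_nodes=3):
    """True when the status is green and every expected node has joined."""
    health = json.loads(body)
    return (
        health["status"] == "green"
        and health["number_of_nodes"] == expected_nodes
    )


# Hand-written sample body for illustration (not captured output).
sample = '{"cluster_name": "elk", "status": "green", "number_of_nodes": 3}'
print(cluster_is_healthy(sample))
```

Against a live cluster, the call would look like `cluster_is_healthy(fetch_health("http://es1:9200", "elastic", "123456"))`, using the host and credentials from this guide.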

Install ZooKeeper:

Note: first upload the ZooKeeper and Kafka packages to /root.

Format the disk with an xfs file system

[root@kafka1 ~] mkfs.xfs /dev/vdb

Mount the disk on /data/

[root@kafka1 ~] mount /dev/vdb /data/

Configure automatic mounting at boot

[root@kafka1 ~] echo "/dev/vdb /data xfs defaults 0 0" >> /etc/fstab

Create the ZooKeeper data directory (data) and log directory (logs)

[root@kafka1 ~] mkdir /data/zookeeper/zk0/{data,logs} -p

Extract the ZooKeeper package apache-zookeeper-3.6.1-bin.tar.gz

[root@kafka1 ~] tar zxvf apache-zookeeper-3.6.1-bin.tar.gz

Move the extracted directory to /usr/local/

[root@kafka1 ~] mv apache-zookeeper-3.6.1-bin /usr/local/

Change to the directory that holds the ZooKeeper configuration files

[root@kafka1 ~] cd /usr/local/apache-zookeeper-3.6.1-bin/conf/

Keep the sample configuration as a backup named zoo_sample.cfg.backup

[root@kafka1 ~] mv zoo_sample.cfg zoo_sample.cfg.backup

Copy it to create the working configuration file zoo.cfg

[root@kafka1 ~] cp zoo_sample.cfg.backup zoo.cfg

Edit the configuration file

[root@kafka1 ~] vim zoo.cfg

tickTime=2000 (default)

initLimit=5 (changed to 5)

syncLimit=2 (changed to 2)

dataDir=/data/zookeeper/zk0/data (snapshot directory; it must exist and was created above)

dataLogDir=/data/zookeeper/zk0/logs (transaction log directory; it must exist and was created above)

clientPort=2181 (default port)

server.1=kafka1:2888:3888 (hostname of the ZooKeeper node, which runs on the Kafka host, followed by the peer and leader-election ports)

server.2=kafka2:2888:3888 (same format for the second node)

server.3=kafka3:2888:3888 (same format for the third node)

[root@kafka1 ~] echo 1 > /data/zookeeper/zk0/data/myid (the value must be different on every machine and match that host's server.N entry; it is the node's unique ID)

[root@kafka1 ~] touch /data/zookeeper/zk0/data/initialize (create the initialization marker file)
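Because each myid has to agree with the `server.N` line for the same host, the value can be derived from zoo.cfg instead of typed by hand. The following is a minimal sketch, assuming the `server.N=host:port:port` format shown above; the function name is illustrative.

```python
import re


def server_ids(zoo_cfg_text):
    """Map each hostname in the server.N lines of zoo.cfg to its numeric id."""
    ids = {}
    for line in zoo_cfg_text.splitlines():
        match = re.match(r"\s*server\.(\d+)\s*=\s*([^:\s]+):", line)
        if match:
            ids[match.group(2)] = int(match.group(1))
    return ids


# The server entries from the configuration in this guide.
zoo_cfg = """
clientPort=2181
server.1=kafka1:2888:3888
server.2=kafka2:2888:3888
server.3=kafka3:2888:3888
"""

for host, myid in server_ids(zoo_cfg).items():
    print(f"{host}: echo {myid} > /data/zookeeper/zk0/data/myid")
```

Each printed line is the command to run on the named host.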

Adjust the Java heap size

[root@kafka1 ~] vim /usr/local/apache-zookeeper-3.6.1-bin/bin/zkServer.sh

Below line 28, ZOOBINDIR="$(cd "${ZOOBIN}"; pwd)", add the following line

SERVER_JVMFLAGS=" -Xmx2g -Xms2g"

Start the service

[root@kafka1 ~] /usr/local/apache-zookeeper-3.6.1-bin/bin/zkServer.sh start

Stop the service

[root@kafka1 ~] /usr/local/apache-zookeeper-3.6.1-bin/bin/zkServer.sh stop

Restart the service

[root@kafka1 ~] /usr/local/apache-zookeeper-3.6.1-bin/bin/zkServer.sh restart

Check the service status

[root@kafka1 ~] /usr/local/apache-zookeeper-3.6.1-bin/bin/zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /usr/local/apache-zookeeper-3.6.1-bin/bin/…/conf/zoo.cfg

Client port found: 2181. Client address: localhost.

Mode: follower (on the leader node this line reads leader)

If the status output differs from the above, look for WARN or ERROR entries in the log and fix what they report

vim /usr/local/apache-zookeeper-3.6.1-bin/logs/zookeeper-root-server-kafka3.out

Open the ZooKeeper console

[root@kafka1 ~] /usr/local/apache-zookeeper-3.6.1-bin/bin/zkCli.sh -server 127.0.0.1:2181

Exit the console

[zk: 127.0.0.1:2181(CONNECTED) 0] quit

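Install Kafka:

[root@kafka1 ~] mkdir /data/kafka-logs

Extract the Kafka package (run this from the directory that contains the package, or give its absolute path)

[root@kafka1 ~] tar zxvf kafka_2.12-2.6.0.tgz

Move the extracted directory kafka_2.12-2.6.0 to /usr/local/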

[root@kafka1 ~] mv kafka_2.12-2.6.0 /usr/local/

Edit the Kafka configuration file

[root@kafka1 ~] vim /usr/local/kafka_2.12-2.6.0/config/server.properties

Line 21: broker.id=0 #(this broker's unique ID; assign integers in order, starting from 0)

Line 31: listeners=PLAINTEXT://192.168.10.15:9092 #(use this host's IP)

Line 42: num.network.threads=3

Line 45: num.io.threads=4

Line 48: socket.send.buffer.bytes=102400

Line 51: socket.receive.buffer.bytes=102400

Line 54: socket.request.max.bytes=104857600

Line 60: log.dirs=/data/kafka-logs

Line 65: num.partitions=1

Line 69: num.recovery.threads.per.data.dir=1

Line 74: #offsets.topic.replication.factor=1 #(comment out)

Line 75: #transaction.state.log.replication.factor=1 #(comment out)

Line 76: #transaction.state.log.min.isr=1 #(comment out)

Line 103: log.retention.hours=168

Line 110: log.segment.bytes=536870912 #(any multiple of 1024 works)

Line 114: log.retention.check.interval.ms=300000

Line 123: zookeeper.connect=kafka1:2181,kafka2:2181,kafka3:2181 #(list the hostname and port of every ZooKeeper node)

Line 126: zookeeper.connection.timeout.ms=6000

# Append the following settings at the end of the file; if the last line of the default file is enabled, comment it out

default.replication.factor=3

auto.create.topics.enable=false

min.insync.replicas=2

queued.max.requests=1000

controlled.shutdown.enable=true

delete.topic.enable=true
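The replication settings interact: a topic cannot have more replicas than there are brokers, and `min.insync.replicas` cannot exceed the topic's replication factor. When the two are equal, a producer that waits for all in-sync replicas (`acks=all`) is rejected as soon as one replica is offline. The following is a minimal sketch of that check; the function name and message wording are illustrative.

```python
def check_replication(broker_count, replication_factor, min_insync_replicas):
    """Return a list of problems with a Kafka replication configuration."""
    problems = []
    if replication_factor > broker_count:
        problems.append(
            f"replication factor {replication_factor} exceeds "
            f"{broker_count} brokers"
        )
    if min_insync_replicas > replication_factor:
        problems.append(
            f"min.insync.replicas {min_insync_replicas} exceeds "
            f"replication factor {replication_factor}"
        )
    elif min_insync_replicas == replication_factor and replication_factor > 1:
        problems.append(
            "min.insync.replicas equals the replication factor: "
            "acks=all writes fail while any replica is offline"
        )
    return problems


# Broker defaults from this guide: 3 brokers, replication factor 3, min ISR 2.
print(check_replication(3, 3, 2))
# A topic created with --replication-factor 2 on the same cluster.
print(check_replication(3, 2, 2))
```

The second call matters for this guide because the topic is later created with `--replication-factor 2` while `min.insync.replicas=2` stays in force.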

Start command

[root@kafka1~] /usr/local/kafka_2.12-2.6.0/bin/kafka-server-start.sh -daemon /usr/local/kafka_2.12-2.6.0/config/server.properties

Check the log file for WARN or ERROR entries; if there are any, the service did not start cleanly and the problem must be fixed

[root@kafka1~] tail -100 /usr/local/kafka_2.12-2.6.0/logs/server.log

Install Logstash:

[root@kafka1~] yum -y install logstash-7.8.0.rpm

[root@kafka1~] mkdir /data/logstash

[root@kafka1~] vim /etc/logstash/logstash.yml

Line 19: node.name: kafka1 (node name; any value works)

Line 28: path.data: /data/logstash (data directory)

Line 67: pipeline.ordered: auto

Line 73: path.config: /etc/logstash/conf.d (load the pipeline configuration from this subdirectory)

Line 241: path.logs: /var/log/logstash (log directory)

Enable X-Pack monitoring for Logstash

Line 256: xpack.monitoring.enabled: true

Line 257: xpack.monitoring.elasticsearch.username: logstash_system

Line 258: xpack.monitoring.elasticsearch.password: "123456" (the password set during the Elasticsearch installation)

Line 259: xpack.monitoring.elasticsearch.hosts: ["http://es1:9200", "http://es2:9200", "http://es3:9200"] (hostname or IP and port of each Elasticsearch server; the default uses https, change it to http)

Create the topic. The topic name must be identical in three places: the Kafka topic itself, the Filebeat configuration file, and the pipeline configuration under /etc/logstash/conf.d.

[root@kafka1~] /usr/local/kafka_2.12-2.6.0/bin/kafka-topics.sh --create --bootstrap-server kafka3:9092 --replication-factor 2 --partitions 2 --topic filebeats

(--bootstrap-server takes the hostname and port of a Kafka broker; the topic name filebeats is arbitrary)

[root@kafka1~] /usr/local/kafka_2.12-2.6.0/bin/kafka-topics.sh --list --bootstrap-server kafka3:9092

The following output shows that the topic was created

filebeats

Write the pipeline configuration file under /etc/logstash/conf.d/

[root@kafka1~] vim /etc/logstash/conf.d/filebeats.conf

input{

kafka{

bootstrap_servers => ["kafka1:9092,kafka2:9092,kafka3:9092"]

topics => "filebeats"

codec => json

consumer_threads => 2

}

}

output{

elasticsearch{

hosts => ["es1:9200","es2:9200","es3:9200"]

user => "elastic"

password => "123456"

manage_template => true

index => "filebeat-7.8.1-%{+YYYY.MM.dd}"

}

}
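The `index` setting in the output above produces one index per day: Logstash fills `%{+YYYY.MM.dd}` from each event's `@timestamp`, formatted in UTC. The following is a minimal sketch of the resulting names, useful when predicting which index an event lands in; the function name is illustrative and the prefix is the one from the configuration above.

```python
from datetime import datetime, timedelta, timezone


def daily_index(timestamp, prefix="filebeat-7.8.1"):
    """Return the daily index name for a timezone-aware event timestamp."""
    utc = timestamp.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


# An event logged at 07:30 on 2 August in UTC+8 is still 1 August in UTC.
beijing = timezone(timedelta(hours=8))
event_time = datetime(2020, 8, 2, 7, 30, tzinfo=beijing)
print(daily_index(event_time))
```

This is why events from the early morning in UTC+8 appear in the previous day's index.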

[root@kafka1~] systemctl start logstash

[root@kafka1~] systemctl enable logstash

[root@kafka1~] systemctl status logstash

Note: if Logstash does not start, check the errors in the log: vim /var/log/logstash/logstash-plain.log

Install and deploy Kibana:

[root@kafka1~] yum -y install kibana-7.6.1-x86_64.rpm

[root@kafka1~] vim /etc/kibana/kibana.yml

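Line 2: server.port: 5601 (default)

Line 7: server.host: "192.168.10.15" (this host's IP)

Line 25: server.name: "kafka3" (this host's hostname)

Line 28: elasticsearch.hosts: ["http://192.168.10.10:9200"] (IP and port of any one Elasticsearch server)

Line 37: kibana.index: ".kibana" (name of the index in which Kibana stores its own data in ES)

Line 46: elasticsearch.username: "kibana" (user Kibana connects to ES as; it is set again in the keystore below and the two must match)

Line 47: elasticsearch.password: "123456" (that user's password, set during the ES installation)

Line 115: i18n.locale: "zh-CN" (display the UI in Chinese)

Append the following at the end of the file: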

xpack.reporting.encryptionKey: "a_random_string"

xpack.security.encryptionKey: "something_at_least_32_characters"
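The two values above are placeholders and should be replaced with random strings of at least 32 characters. The following is a minimal sketch that generates such a key with the standard library's `secrets` module; the function name and the choice of hexadecimal output are assumptions for illustration.

```python
import secrets


def random_key(hex_chars=32):
    """Return a random hexadecimal string of exactly hex_chars characters."""
    if hex_chars % 2:
        raise ValueError("hex_chars must be even")
    # token_hex(n) returns 2 * n hexadecimal characters.
    return secrets.token_hex(hex_chars // 2)


print("xpack.reporting.encryptionKey:", f'"{random_key()}"')
print("xpack.security.encryptionKey:", f'"{random_key()}"')
```

Paste the printed lines into kibana.yml in place of the placeholder values.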

[root@kafka1~] /usr/share/kibana/bin/kibana-keystore --allow-root create

A Kibana keystore already exists. Overwrite? [y/N] y

Created Kibana keystore in /var/lib/kibana/kibana.keystore

[root@kafka1~] /usr/share/kibana/bin/kibana-keystore --allow-root add elasticsearch.username

Enter value for elasticsearch.username: kibana (must match the user name in the configuration file)

[root@kafka1~] /usr/share/kibana/bin/kibana-keystore --allow-root add elasticsearch.password

Enter value for elasticsearch.password: ****** (the kibana user's password set during the ES installation)

[root@kafka1~] systemctl start kibana

If startup fails, check the system log, the ES log, and the Logstash log

Install and deploy Filebeat:

[root@kafka1~] yum -y install filebeat-7.8.1-x86_64.rpm

[root@kafka1~] vim /etc/filebeat/filebeat.yml

Line 15: filebeat.inputs:

Line 21: - type: log

Line 24: enabled: true

Line 27: paths:

Line 28: - /var/log/messages (path of the log to collect)

Line 67: filebeat.config.modules:

Line 69: path: ${path.config}/modules.d/*.yml

Line 72: reload.enabled: false

Line 79: setup.template.settings:

Line 80: index.number_of_shards: 3

Line 99: fields:

Line 100: ip: 192.168.10.13

Line 118: setup.kibana:

Line 160: output.kafka:

Line 161: enabled: true

Line 162: hosts: ["192.168.10.13:9092","192.168.10.14:9092","192.168.10.15:9092"]

Line 163: topic: "filebeats" (must match the topic created in Kafka and the topic in the Logstash pipeline configuration)

Line 164: compression: gzip

Line 165: max_message_bytes: 1000000

Line 185: processors:

Line 186: - drop_fields:

Line 187: fields: ["agent.ephemeral_id", "agent.hostname", "agent.id", "agent.type", "agent.version", "ecs.version", "event.code", "event.created", "event.kind", "event.provider", "host.architecture", "host.id", "host.name", "host.os.build", "host.os.family", "host.os.kernel", "host.os.platform", "host.os.version", "process.name", "user.domain", "winlog.activity_id", "winlog.api", "winlog.computer_name", "winlog.event_data.CallerProcessId", "winlog.event_data.SubjectDomainName", "winlog.event_data.SubjectLogonId", "winlog.event_data.SubjectUserName", "winlog.event_data.SubjectUserSid", "winlog.event_data.TargetDomainName", "winlog.event_data.TargetSid", "winlog.event_data.TargetUserName", "winlog.logon.id", "winlog.opcode", "winlog.process.pid", "winlog.process.thread.id", "winlog.provider_name", "winlog.record_id"]

Line 189: ignore_missing: false (indent it to the same level as fields above)

Line 190: logging.level: info

Line 191: monitoring.enabled: false

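[root@kafka1~] filebeat export template > filebeat.template.json (the output file name is arbitrary as long as it ends in .json)

[root@kafka1~] curl -u elastic:123456 -XPUT -H 'Content-Type: application/json' http://es1:9200/_template/filebeat-7.8.1 -d@filebeat.template.json

Note: this command uploads the template to ES; change the user name, password, hostname, version, and file name to match your environment.

[root@kafka1~] filebeat setup -e -E output.kafka.enabled=false -E output.elasticsearch.hosts=[es1:9200] -E output.elasticsearch.username=elastic -E output.elasticsearch.password=123456 -E setup.kibana.host=kafka1:5601 -E output.logstash.enabled=false

(Filebeat accepts only one enabled output, so the Kafka output configured above is disabled for this one-off setup run)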

[root@kafka1~] systemctl start filebeat

[root@kafka1~] systemctl status filebeat

If startup fails, check the system log, the ES log, and the Logstash log

Log in to Kibana and create the index pattern:
